Controlling Synthesizers with Your Webcam (and Computer Vision)

(Find the project on GitHub: https://github.com/justindachille/CamMIDI)

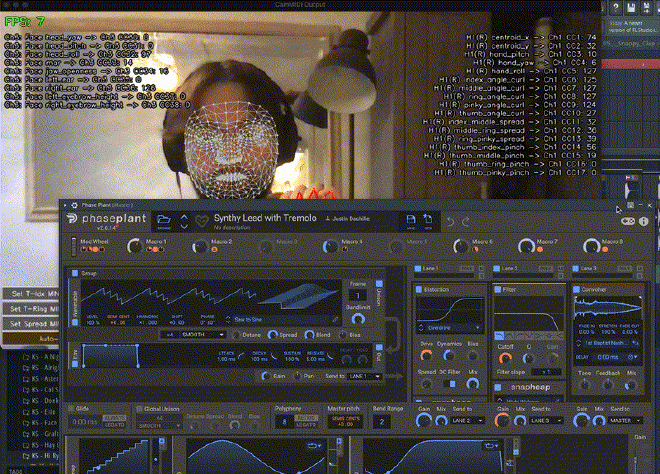

Motivated by a lack of physical hardware and wanting more expressive control over my music than a mouse affords, I drew on my computer vision background to develop CamMIDI[1]. The project turns a standard webcam into a nuanced MIDI controller.

What is CamMIDI?

CamMIDI uses Google’s MediaPipe[2] framework together with OpenCV to perform real-time hand and face tracking, converting your movements into a rich set of data points. These are translated into MIDI messages and sent over a virtual MIDI cable, so you are free to route and use the control data in whatever software you prefer.
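The final step of that pipeline, turning a tracked value into a MIDI message, can be sketched in a few lines. This is a minimal illustration, not CamMIDI's actual code: it assumes each feature has already been normalized to the range 0.0 to 1.0, and the helper name `to_control_change` is hypothetical. It builds the raw three-byte Control Change message from the MIDI 1.0 specification (status byte `0xB0` combined with the channel, then the controller number, then a 7-bit value). In practice a library such as mido would build the message and send it to the virtual port.

```python
def to_control_change(value: float, controller: int, channel: int = 0) -> bytes:
    """Build a raw MIDI Control Change message from a normalized value.

    Hypothetical helper: assumes `value` is already normalized to [0.0, 1.0].
    """
    if not 0 <= controller <= 127:
        raise ValueError("controller number must be 0-127")
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0-15")
    # Clamp out-of-range tracking values instead of failing mid-performance.
    clamped = min(max(value, 0.0), 1.0)
    # Scale to the 7-bit data range used by MIDI CC values (0-127).
    data = round(clamped * 127)
    # 0xB0 is Control Change; the low nibble selects the channel (0 = MIDI channel 1).
    status = 0xB0 | channel
    return bytes([status, controller, data])


# Example: a half-open mouth sent as CC 1 (modulation wheel) on MIDI channel 1.
msg = to_control_change(0.5, controller=1)
print(msg.hex())  # → b00140
```

Clamping rather than raising on out-of-range values is a design choice: tracking noise occasionally pushes a feature slightly past its calibrated range, and a synth parameter pinned at 0 or 127 is usually preferable to a dropped message.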

Key Features

CamMIDI can track up to two hands and one face and exposes many parameters for each. Some examples:

- Hands: Individual finger curl angles, pinch distances between the thumb and each fingertip, 2D screen position (X/Y), and overall hand orientation (Pitch/Yaw/Roll).

- Face: Mouth openness (mouth aspect ratio, MAR, and jaw openness), eye openness and blink detection (eye aspect ratio, EAR), head pose (Pitch/Yaw/Roll), and eyebrow height.
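The eye aspect ratio (EAR) in the list above is a standard blink measure: the eye's height divided by its width, computed from six landmarks around the eye. The sketch below shows the common six-point formulation. Which MediaPipe face-mesh landmarks CamMIDI feeds into it, and which blink threshold it uses, are not stated here, so the point ordering and the `BLINK_THRESHOLD` value are assumptions. The mouth aspect ratio (MAR) follows the same height-over-width idea applied to lip landmarks.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Six-landmark eye aspect ratio (EAR).

    Assumed ordering: eye[0] and eye[3] are the eye corners, eye[1] and eye[2]
    lie on the upper lid, and eye[5] and eye[4] on the lower lid below them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    # Height relative to width: roughly constant while open, near 0 when closed.
    return vertical / (2.0 * horizontal)


BLINK_THRESHOLD = 0.2  # hypothetical value; would need tuning per user and camera


def is_blinking(eye: Sequence[Point]) -> bool:
    return eye_aspect_ratio(eye) < BLINK_THRESHOLD


open_eye = [(0, 0), (3, -2), (7, -2), (10, 0), (7, 2), (3, 2)]
closed_eye = [(0, 0), (3, -0.2), (7, -0.2), (10, 0), (7, 0.2), (3, 0.2)]
print(eye_aspect_ratio(open_eye), is_blinking(open_eye), is_blinking(closed_eye))
```

Because EAR is a ratio of distances within the same eye, it is largely unaffected by how far the face is from the camera.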

Calibration

CamMIDI supports both manual and automatic calibration. I tried to make every parameter invariant to distance from the camera by normalizing it by hand size, but this is not perfect: some features, such as finger spreads, remain very sensitive to distance even after calibration.
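Normalizing by hand size, followed by mapping into a calibrated range, can be sketched as follows. This is an illustration under stated assumptions, not CamMIDI's implementation. It uses MediaPipe's hand landmark numbering (0 = wrist, 4 = thumb tip, 8 = index fingertip, 9 = middle-finger MCP joint). It takes the wrist-to-middle-MCP distance as the hypothetical "hand size" reference, and it models automatic calibration as tracking the smallest and largest values observed. The names `normalized_pinch` and `RangeCalibrator` are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# MediaPipe hand landmark indices used below.
WRIST, THUMB_TIP, INDEX_TIP, MIDDLE_MCP = 0, 4, 8, 9


def normalized_pinch(landmarks: Sequence[Point], fingertip: int = INDEX_TIP) -> float:
    """Thumb-to-fingertip distance divided by a hand-size reference (assumed choice)."""
    hand_size = math.dist(landmarks[WRIST], landmarks[MIDDLE_MCP])
    return math.dist(landmarks[THUMB_TIP], landmarks[fingertip]) / hand_size


class RangeCalibrator:
    """Auto-calibration sketch: remember the observed min/max and rescale to 0..1."""

    def __init__(self) -> None:
        self.lo = math.inf
        self.hi = -math.inf

    def update(self, value: float) -> None:
        self.lo = min(self.lo, value)
        self.hi = max(self.hi, value)

    def scale(self, value: float) -> float:
        if self.hi <= self.lo:  # not enough data yet to define a range
            return 0.0
        t = (value - self.lo) / (self.hi - self.lo)
        return min(max(t, 0.0), 1.0)


# The same pose seen twice as large (closer to the camera) gives the same value.
hand = [(0.0, 0.0)] * 21
hand[MIDDLE_MCP] = (0.0, -1.0)
hand[THUMB_TIP] = (-0.5, -1.5)
hand[INDEX_TIP] = (0.0, -1.5)
closer = [(2 * x, 2 * y) for x, y in hand]
print(normalized_pinch(hand), normalized_pinch(closer))  # prints 0.5 0.5
```

In this model, manual calibration would amount to setting `lo` and `hi` directly instead of learning them. The example also hints at why normalization is imperfect: the hand-size reference itself shrinks or grows when the hand tilts toward or away from the camera, not only when it moves closer.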

Some features are convenient because they need no calibration at all. Finger curl, for example, is simply an angle between 0 and 180 degrees.
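An angle like this can be computed at a finger joint from the two bone segments that meet there. The sketch below assumes 3D landmarks and measures the angle at the middle point of three consecutive landmarks (for the index finger, MediaPipe landmarks 5, 6, 7 would give the PIP joint). CamMIDI may define curl differently, for instance across several joints or as 180 degrees minus this angle. Under this definition, a straight finger reads 180 and a sharply bent one approaches 0. The function name `joint_angle` is hypothetical.

```python
import math
from typing import Sequence


def joint_angle(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Angle at b, in degrees (0-180), between the segments b->a and b->c."""
    ba = [ai - bi for ai, bi in zip(a, b)]
    bc = [ci - bi for ci, bi in zip(c, b)]
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0:
        raise ValueError("landmarks must not coincide")
    dot = sum(x * y for x, y in zip(ba, bc))
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


straight = joint_angle((0, 0, 0), (0, 1, 0), (0, 2, 0))
bent = joint_angle((0, 0, 0), (0, 1, 0), (1, 1, 0))
print(straight, bent)
```

Since the result is always confined to 0 to 180 degrees regardless of hand size or distance, it can be mapped straight to a MIDI value without a learned range.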

Don’t forget to hit the “Save Config” button (or press ‘s’) to store your calibrated ranges!

Comparisons to Existing Solutions

Only after completing the project did I research existing solutions. That step would normally come before development, but I already had a clear picture of what I wanted to build. Still, I suspected others had attempted something similar.

Compared with Imogen Heap’s MiMU gloves[3], which cost thousands of dollars (though they are very cool and, of course, offer more flexibility and stability than this project!), this code is free. CamMIDI also includes built-in calibration and tracks many more features than similar projects[4] that also use Google’s MediaPipe[2].

Getting Started

- Clone the repo: `git clone https://github.com/justindachille/CamMIDI.git`

- Install dependencies: `pip install -r requirements.txt` (using a virtual environment is recommended).

- Set up a virtual MIDI port (e.g., IAC Driver on macOS, loopMIDI on Windows).

- Configure `config.yaml`: set your `midi.port_name`.

- Run: `python main.py`

- Connect & calibrate: point your target software to the virtual MIDI port and use the CamMIDI GUI to calibrate.

Check the README on GitHub for detailed setup and usage instructions.

Conclusion

My hope is to see this project used by anyone looking for novel ways to interact with digital creative tools. Unfortunately, its sensitivity to movement and lighting means it is not robust enough for live performance, but it excels in controlled settings such as home studios. I’m excited to see how far people can take it!